Docker is the name of a set of Platform as a Service (PaaS) products for deploying software. They use operating-system-level virtualization to deliver software in packages called “containers”. I hope that by the end of this post you will be familiar with Docker and understand its benefits. To get there, I will start with a brief explanation of Virtual Machines and how Docker containers evolved from them.

It’s all about Virtual Machines

It is worth starting with a simple explanation of what Virtual Machines (VMs) are, because Docker containers are, in the end, an evolution of VMs.

So, what is a Virtual Machine? As the name implies, it is a virtualization, or emulation, of a computer system. One of its handiest features is the snapshot: you capture the machine’s current state and can start instances of it at any time. This way, you can create a VM with a specific set of applications, do whatever you need to do, and if you mess something up (or simply need to), go back to the original state captured in the snapshot. VMs are especially useful because you can keep a machine configured in a particular way, always start over from that initial checkpoint, or even run multiple instances of the same snapshot at the same time.
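To make the snapshot idea concrete, here is a toy Python model, assuming a machine’s entire state is just the set of applications installed on it. `Machine`, `take_snapshot`, and `start_instance` are hypothetical names for illustration only; real hypervisors capture disk (and optionally memory) images rather than copying Python objects. What the sketch shows is the property described above: every instance started from a snapshot is independent, so you can break one and simply start over.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class Machine:
    """Toy machine whose whole 'state' is its set of installed applications."""
    installed: set[str] = field(default_factory=set)


def take_snapshot(machine: Machine) -> Machine:
    # A snapshot is an independent copy of the machine's state at this moment.
    return copy.deepcopy(machine)


def start_instance(snapshot: Machine) -> Machine:
    # Each instance gets its own copy, so changes made inside one instance
    # never leak back into the snapshot or into other instances.
    return copy.deepcopy(snapshot)


configured = Machine({"mysql"})      # machine set up exactly the way we want
snap = take_snapshot(configured)

broken = start_instance(snap)
broken.installed.add("bad-config")   # "mess something up" in one instance
other = start_instance(snap)         # a second instance from the same snapshot
restored = start_instance(snap)      # "roll back": start again from the snapshot

print(sorted(broken.installed))      # ['bad-config', 'mysql']
print(sorted(restored.installed))    # ['mysql']
```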

Virtual Machines are not a new concept; they have existed for a long time and are widely used in the industry. VMs are commonly installed on servers so that a single piece of hardware can host multiple instances. Imagine installing MySQL just once and taking a snapshot. Then, separately, on a brand-new machine, you install WordPress and take another snapshot. You end up with two snapshots that you can run side by side on the same server.

This is great, especially for production. Installing the applications and verifying that they work before taking the snapshots reduces the risk of installation issues in production. You can test the snapshots thoroughly in your local environment, so by the time they reach production you know they are ready and deployment is much simpler. Additionally, if you need three MySQL instances, you can load the same snapshot on three different servers, or even three times on the same server. Install once, test once, and deploy as you like. Amazing! But if Virtual Machines are so great, what’s up with containers?

Containerize All Things

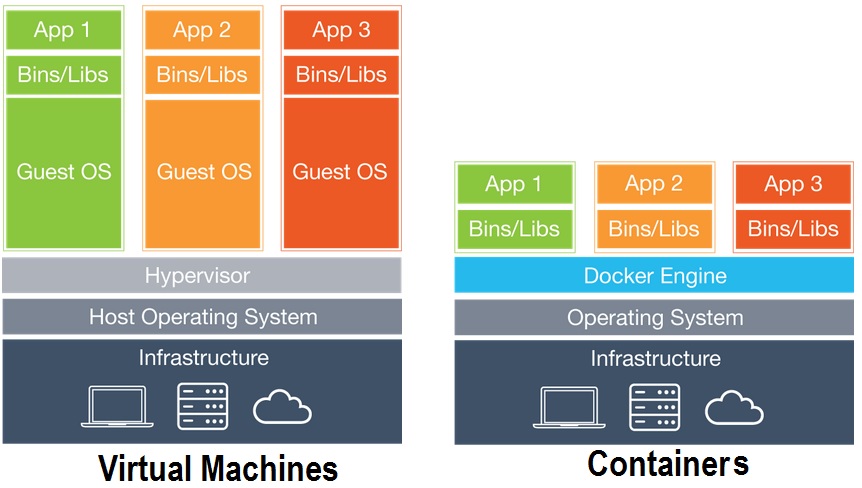

Docker containers, which I will simply call “containers” from here on, are the evolution of the Virtual Machine concept. VMs have a big downside: they come with EVERYTHING that was on the machine when the snapshot was created, including, but not limited to, a full Operating System. This “guest” OS runs on top of a hypervisor on the “host” machine, which, as you can imagine, adds a lot of overhead. Also, as far as licensing goes, each VM is treated as its own machine, so with Windows you may need a license for every VM you spin up. Do you see where I’m going?

A Docker container, on the other hand, shares the host’s Operating System kernel instead of bringing its own. This means you only package the libraries and applications you really need (Docker calls this package an image), and a running instance of that package is what we call a “container”. Containers tend to be very lightweight, to the point that you can often get one running in seconds! It is even possible to run Linux containers on a Windows Docker host, but I won’t get into that right now. All in all, using Docker saves us resources and OS licenses, and ultimately some ca$h.

Docker Ecosystem

There are a few things I always consider before adopting a new application, framework, service, or language: how well the ecosystem is documented, how often releases, improvements, and bug fixes are published, and how big the community around it is. Together, these are good indicators of whether a platform is a good or a bad choice.

Luckily, Docker has a HUGE ecosystem, a big community supporting it, and lots of documentation. I’m not afraid to say that Docker containers are the new standard for IT Operations. It’s a good time to play around with Docker, since it’s a must-know not only for operations engineers but also for developers and even managers!

What’s after Docker?

So Docker is great for packaging our applications, starts up quickly, and makes better use of server resources. The next step after learning Docker is an “orchestrator”, which helps us make even better use of those resources. Orchestrators can scale up or down automatically when demand changes, and they help distribute load across multiple servers. But that is a whole different subject I will write about later.
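This post doesn’t name a specific orchestrator, but to make “scale up or down automatically” a little more concrete, here is a minimal Python sketch of the proportional rule that Kubernetes documents for its Horizontal Pod Autoscaler: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The function name, the default min/max bounds, and the CPU numbers in the example are hypothetical, and the real autoscaler adds tolerances, stabilization windows, and other checks that are deliberately left out here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Replica count suggested by a proportional scaling rule.

    Hypothetical helper modeled on the core formula in the Kubernetes
    Horizontal Pod Autoscaler docs; tolerance and stabilization are omitted.
    """
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    # Scale the replica count by how far the observed metric is from the target.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# Example: average CPU utilization (%) per replica against a 50% target.
print(desired_replicas(3, 90, 50))   # 6: load above target, scale up
print(desired_replicas(4, 20, 50))   # 2: load well below target, scale down
print(desired_replicas(5, 300, 50))  # 10: capped at max_replicas
```

Scaling in proportion to the metric means a single calculation can jump straight to roughly the right size, instead of adding or removing one replica at a time.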
